
At the start of 2013, the Virginia Coalition for Open Government released a survey of city and county budgets posted online, titled “How Many Clicks to Get to Your Budget?”

Based on the 2012 local budget cycle, the survey measured how easy it was for a citizen to find a locality’s current fiscal year budget on the locality’s website and how easy it was to use and understand that budget document.

Based on information provided by VCOG, Dr. Quentin Kidd, director of the Judy Ford Wason Center for Public Policy at Christopher Newport University, developed a data collection sheet that CNU students used when they visited randomly assigned local government websites. VCOG reviewed the results for each locality and compressed the data into 10 questions to be asked and answered for each site. Each answer was awarded a certain number of points, with a top possible score of 50. Extra credit points were also available. All 35 independent cities and 99 counties in Virginia were assigned letter grades corresponding to their point totals.

Scores covered the whole range, from a high of 50 to a low of 0, and grades ran from A+ to F:

- 18 received grades from A- to A+
- 43 received grades from B- to B+
- 35 received grades from C- to C+
- 12 received grades from D- to D+
- 11 received an F for scores from 1 to 26
- 15 received an F for a score of 0

Looking Back

The letter accompanying the survey’s release to the press stressed that the results were not intended “to embarrass any locality or even to scold them.” Nonetheless, early media coverage of the survey focused on the number of localities that received failing grades.

“26 localities get failing grade on budget access,” proclaimed the headlines of no fewer than eight newspapers and television stations in the day or two after the survey’s release. (At right, headline from the Virginian-Pilot’s online version of the story, Jan. 5, 2013)

After that, though, coverage settled into more localized reviews of the grades that included quotes from government officials and VCOG, as well as overviews of the survey as a whole.

At least 37 print and broadcast news outlets covered the story, from Wise County to Norfolk, Northern Virginia to Halifax and everywhere in between.

There were a couple of missteps along the way. The City of Alexandria contacted VCOG to point out both a shorter pathway of clicks and an alternative format for the budget for which the city had not received credit. Had it received credit for those, Alexandria would have earned an A- instead of a B.

In Hopewell, VCOG and CNU students inadvertently used an outdated website address, which at the time nonetheless appeared at the top of the search results on Google. Because that website had not been updated in many months, VCOG took points off for outdated or incomplete data and the city received an F. After the Hopewell city manager’s office alerted VCOG to the problem, VCOG issued a press release with the corrected grade of C+.

We were eager to issue the release. Eager because it gave VCOG the opportunity to demonstrate by deed and word that the primary objective of the survey was to create a dialogue about the best way to present and make accessible the current annual budget. The city called, we figured out where we went wrong, and we worked together in issuing the press release. It was beneficial to all to be honest and forthright, not to stick to our guns just for the sake of sticking to our guns.

That’s why it was also difficult when we talked to localities like Louisa and Henry Counties. In Louisa, the link to the budget file was dead. We tried getting there other ways — through searches and other sets of clicks — but we could not get to the budget document any way at all. Without the budget, the county lost out on dozens of points and was given a failing grade. Had the link been working, the county most likely would have earned a C.

Similarly, in Henry County, a link to a budget document appeared front and center on the website, which was great, but every page of that document bore the words “proposed budget.” Nowhere on the posted document, or anywhere else on the website that we could find, was there any statement that the budget had been adopted. Because budgets often change between the proposed and final versions, we did not give Henry County credit for having the current adopted budget online, nor did we give credit for the questions that hinged on the presence of that current budget document.

Individuals from both of these counties contacted VCOG to discuss the grade, and both were understandably aggrieved. Both counties obviously took transparency seriously. They made the effort to get the information to the public, but the effort proved ineffective. To be consistent, we could not give them the same credit as localities that both made the effort and executed it effectively. (At left: The current Henry County website now prominently features the “adopted” budget.)

Local budgets have to be adopted by July 1 of each year. VCOG’s survey was first taken in October (three months later) and reviewed in December (five months later). It may not seem fair to withhold credit for what may have been technical glitches, but the fact remains that the public was without an online option for viewing their budget anywhere from three to five months after its effective date.

We received especially thoughtful criticism about two measurements we took: searching vs. clicking, and public comment regarding the current vs. the upcoming budget.

Searching vs. clicking: VCOG’s survey put a lot of weight on the number of clicks it took to get to a budget: 10 points for one click, 8 points for two clicks, and so on. The survey also gave credit, though less of it (4 points), when the budget appeared on the first page of results from the search function on the locality’s home page.

This led one locality to offer the following comments, based on a survey of its own website users that it had conducted just a few weeks before the VCOG survey:

“[O]nly a third of users usually find things by clicking around (26%) or by using the category tabs on the homepage (9%). The vast majority used the list of departments (34%), the website’s search box (18%), or a third-party search engine (12%).”

I am impressed that the locality had given so much thought to how it presented budgets and other important government information on its website. Some of the larger localities, and those in more affluent, tech-savvy areas, are doing this research and making decisions based on the results. That is how it should be (though it should be noted that 26% of a locality’s users is still a significant segment).

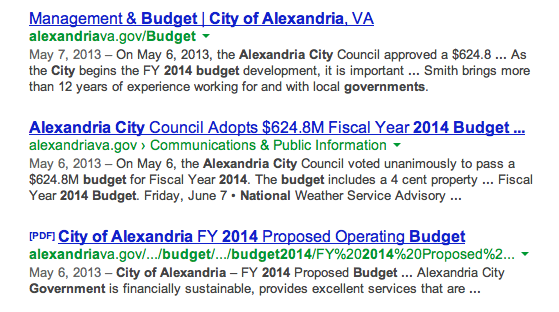

We did not account for third-party search engine results, and perhaps we should have. People are constantly advised to “Google” something, and it is quite possible that a Google search would have brought up the budget for some localities whose sites did not have a search box or whose budgets did not show up on the first page of site search results. (At right, the top entries on Google’s search results when the term “City of Alexandria budget 2014” is entered.)

Localities whose websites are not as complex, or that do not measure how their sites are used in such detail, will need to weigh whether to emphasize searching or clicking. There are good reasons to make the budget easily accessible from the home page, but a locality looking to improve its website presence may decide that not enough of its users land on the home page to justify putting a direct link to the budget there. Again, these are the kinds of conversations the survey hoped to provoke.

Public comment regarding the current budget vs. the upcoming budget: Several localities wrote to VCOG to tout the extensive effort they put in to gather citizen input during the drafting of the upcoming budget. They hold hearings and workshops, send out surveys and take comments online. At least one has created a budget simulation game that allows citizens to try their hand at creating a balanced budget. These are all fantastic ideas and VCOG applauds (loudly!) these proactive steps.

The localities that wrote to VCOG about this issue objected to our scoring: Why, considering all the outreach they do to collect citizen input for the upcoming budget, should they lose a point for not soliciting public comment on the current budget?

It is a fair question. My response was usually two-fold: (1) We had no reliable way to measure efforts put into developing the upcoming budget, and (2) citizen input on upcoming budgets is often based on reaction to or experience with the current budget.

Though some localities had a schedule of the budgeting cycle posted online by October or December when we conducted our survey, most did not, and we had no way of knowing whether they had plans to solicit public comment or when they planned to do it.

A Code of Virginia provision requires that a budget be adopted by July 1. There is no statutory deadline for when citizen comment can or should be solicited for the upcoming budget. If we had picked an arbitrary date of, say, March 15, some localities would have been penalized simply for starting their process a day later, on March 16.

On the second point, this survey measured the current budget. It is reasonable to assume citizens will look at the current budget to assess whether too much or too little is being raised or expended. This information can be important in forming opinions about the upcoming budget, e.g., “I don’t think you budgeted enough/I thought you budgeted too much last year for fire safety, so I think you should budget more/less in the upcoming year.”

As with the search vs. click issue, this is a good dialogue to have. A locality may not feel it is the right use of website real estate to provide a citizen-comment area for the budget, and that is its call to make. We think localities should provide one, but it is they who must make the decision that is best for their citizens.

Looking Forward

Two dozen localities from all across Virginia contacted VCOG about the survey results. Some contacts were from localities that had scored poorly, but even more were from localities that had scored respectably, and some from localities that had scored highly. Some liked the idea of the survey so much (even when they didn’t score well) that they suggested other items VCOG could survey in the future.

I am especially excited about this prospect because I will now have contacts in local government to help craft any future surveys, identify important elements that should be included and winnow out those that should not.

I can’t thank all those officials enough for their willingness to hear our position, consider our opinions and take our feedback seriously. VCOG works as a coalition, and VCOG serves the public. We can do neither if we vilify localities without offering constructive criticism or solutions. We hope the survey will be part of a long and continuing conversation not just about budgets but also about access to public information in general.

Comments

One response to “Follow-up to VCOG’s “How Many Clicks?” Survey”

Open Checkbook

I believe the next survey/study should be: "How many counties/cities have open checkbooks?"

In other words, can citizens look at a particular section of a jurisdiction’s website and see all revenue and expenditures in an understandable way, much like a personal checkbook?

Thank you